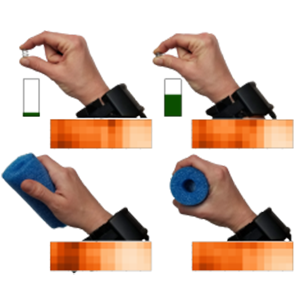

We demonstrate rich inferences about unaugmented everyday objects and hand-object interactions by measuring minute skin surface deformations at the wrist with a capacitance-based sensing technique. The wristband prototype infers muscle and tendon tension, pose, and motion, which we then map to force (9 users, 13.66 ± 9.84 N regression error on classes 0–49.1 N), grasp (9 users, 81 ± 7% classification accuracy on 6 grasps), and continuous interaction (10 users, 99 ± 1% discrimination accuracy between 6 interactions, 89–97% accuracy on 3 states within each interaction) using basic machine learning models. We wrapped these sensing capabilities into a proof-of-concept end-to-end system, Ubiquitous Controls, that enables virtual range inputs by sensing continuous interactions with unaugmented objects. Eight users used our system to control UI widgets (such as sliders and dials) with object interactions (such as "cutting with scissors" and "squeezing a ball"). Finally, we discuss the implications and opportunities of using hands as a ubiquitous sensor of our surroundings.